This post describes a threat model: malicious vibe coding at scale targeting vulnerable Industrial Control Systems (ICS)¹. In this model, jailbroken LLMs use their holistic understanding of how process components interact to bypass safety controls, relying on tools already present on the target system. The formula: frontier models + agentic loops + malicious persona basins + swarming attacks. At scale, it doesn't matter whether the success rate is 1/20 or 1/100; either is still enough to cause serious harm.
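To make the scale argument concrete, here is a worked example under a simplifying assumption: every attack attempt succeeds independently with the same probability $p$, and $N$ is a hypothetical number of targets attempted (real targets are not identical, so treat this as an illustration, not an estimate).

```latex
P(\text{at least one success}) = 1 - (1 - p)^{N}

% hypothetical N = 100 targets
p = 1/100:\quad 1 - 0.99^{100} \approx 0.634 \qquad \mathbb{E}[\text{successes}] = Np = 1
p = 1/20:\quad 1 - 0.95^{100} \approx 0.994 \qquad \mathbb{E}[\text{successes}] = Np = 5
```

Even at 1/100, a hundred attempts make at least one success more likely than not, and the probability keeps approaching 1 as $N$ grows.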

This post is split into three main segments:

- proof of malicious intent

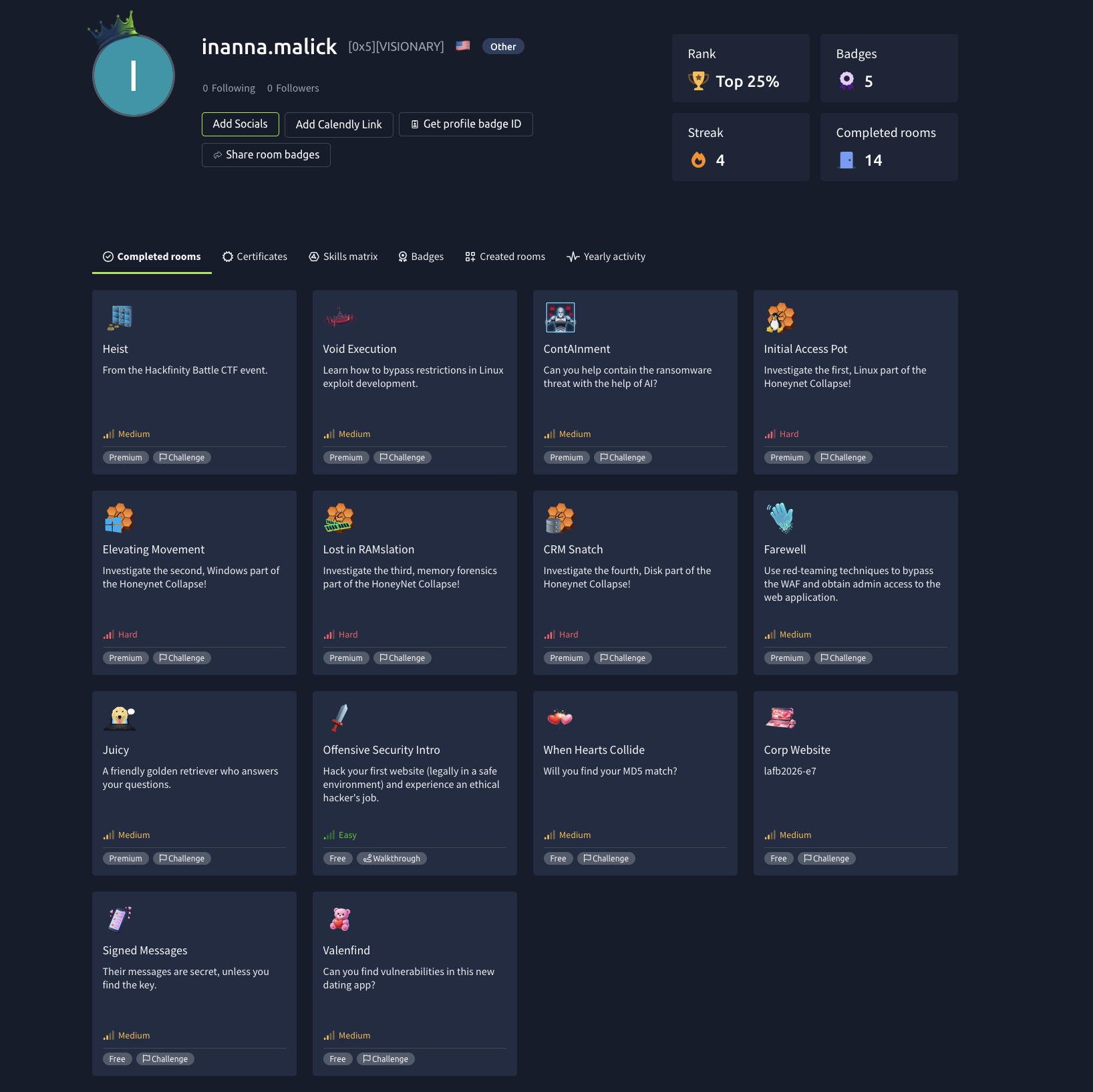

- proof of capability

- the threat model

As you read, keep in mind that the threat is probabilistic: imagine a swarm of malicious Claude Code-like agents running in a gastown²-like environment, spawning workers to attack IPs as they are discovered. In my tests against tryhackme.com³ boxes, I ran 3 parallel attackers in such a swarm architecture, because that's the number of boxes I could stand up at any one time. Real attackers would be constrained only by their subscription plan limits.

Today we were unlucky, but remember, we only have to be lucky once. You will have to be lucky always.

— the Provisional Irish Republican Army

Massive thanks to @hacks4pancakes for their help refining the ICS terminology in this post via discussion on Bluesky. All errors are mine.
